Take your business to the next level with Azure – Microsoft's cloud service.

Azure is designed to help you build, manage, and deploy applications with ease. It supports an array of programming languages, databases, operating systems, and devices, giving you the freedom to leverage the tools and technology you trust.

It seamlessly integrates with existing infrastructure and, unlike on-premises architecture, you only pay for the capacity you use. This gives you the flexibility to rapidly scale up (or down) to support business demands. And with built-in tooling and reliable backup and recovery, it's easy to understand why 95% of the Fortune 500 choose Azure to drive their business forward.

Learn more about Azure and Core BTS' cloud migration and management services below.

Why Choose Microsoft Azure?

Industry leading security, privacy, and compliance

Distributed in-memory application cache system

Persistent and durable storage in the cloud

Standards-based service for identity and access control

Ensure Business Continuity & Security

Azure Site Recovery

Stay ahead of potential business disruptions by running your applications in Azure. Azure Site Recovery is easy to deploy and reduces infrastructure costs, so you can keep your business operational during scheduled or unscheduled outages.

Azure Backup

When data has been corrupted or lost, leverage Azure’s built-in backup solution to restore your data and keep your business moving.

Azure Migration

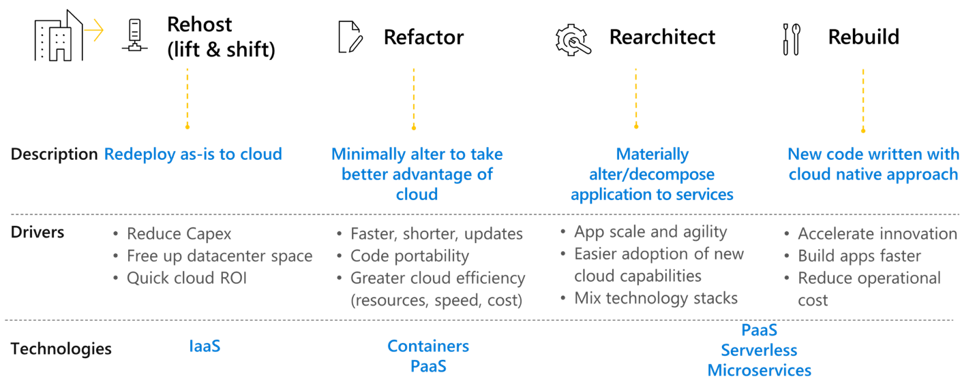

Cloud adoption is a journey, not a singular event. Through a multi-stage approach, we can help you establish a strong network foundation before migrating to the Microsoft Azure cloud platform. We leverage our cloud framework to migrate mission-critical workloads to Azure while executing on your long-term cloud strategy.

Azure Managed Services

Through our Azure Managed Services, we ensure your environment stays agile, secure, and cost-optimized. We take care of the day-to-day cloud operations so that you can focus on strategic business initiatives.

Azure Expert MSP

Microsoft’s Azure Expert MSP program was designed to help clients discover and engage highly capable partners for their most complex cloud projects. Azure Expert MSPs have invested in the people, process, and technology needed to harness deep Azure knowledge and service capabilities. They have also demonstrated their ability to deliver consistent, repeatable managed services on Azure.

Core BTS is proud to be an Azure Expert MSP and deliver innovative cloud solutions for clients in every stage of their cloud journey.

Capture the Full Benefits of the Cloud

A move to the cloud can help you streamline operations, increase agility, and achieve a competitive advantage. It can also create added complexity and cost. Our cloud managed services help keep that complexity and cost in check, so your environment stays agile, secure, and cost-optimized. Let us handle the day-to-day so that you can focus on strategic business initiatives.